We are living in a time when new technologies are often presented as near-magical solutions to all sorts of challenges. Politicians and the media often obscure the political dimension of technologies, leading to confusion (Box 1).

Box 1: What is meant by “tech”?

“Tech” is often used as a shorthand for digital machines and high-tech objects and industries, such as computers, airplanes or biotechnology.

But a technology (“tech” for short) is more accurately defined as a useful set of techniques brought together into a system and sustained over time – often, but not always, in a physical form. Using this definition we can see that in reality, all kinds of technologies are part of our everyday lives: Bicycles and clothes are technologies. So are crop rotation, firearms and nuclear power.

Viewed in this way, we can more clearly see that technologies have consequences for our common good built into them – but these consequences may be positive or negative. As a result, technologies need to be assessed. To evaluate technologies effectively, our definition of them must reject the common assumption that technologies are neutral tools, mere objects or processes that allow us to solve a problem or do something.

We believe that technologies often have political implications, and that power relations are often inadvertently (and sometimes intentionally) built into technological systems and the “tech” devices that arise from them, in a way that could lock in social injustices or endanger ecosystems.

From the hyped-up promises circulated in the media, one would think that technologies like drones, so-called artificial intelligence (AI), gene drive organisms and geo-engineering are going to be an inevitable part of our future. In reality, they are the product of scientism – a belief system that overestimates the importance of physical science and holds that it offers the answers to all our worst difficulties (Box 2).

Box 2: Scientism to pluriverse

To assess a technology effectively, the knowledge on which the evaluation is based must be reliable and understood by those undertaking the assessment. Often, expertise in the natural sciences (physics, chemistry and biology) is given undue weight in assessing new technologies, leading it to dominate other forms of knowledge and judgement that may be just as relevant, such as ecology and ethics. All knowledge and meaning-making, including that which is derived through the natural sciences, can only come from human beings, who can be limited in the perspectives they include and may lack the humility to acknowledge their own ignorance or uncertainty.

Scientism is a belief system that puts too high a value on the natural sciences (particularly physical science) in comparison with other branches of learning or culture, including other knowledge systems from outside Western science. Drawing on the work of philosopher Mary Midgley, we suggest scientism is a three-fold belief system which maintains that:

1) All questions that are raised in a process involving non-scientists are either meaningless or can be answered by science.

2) Science has authority because it is based on empirical evidence – scientific claims will, therefore, always overrule claims made from outside science.

3) Science provides the ultimate account for the basis of reality – the ultimate metaphysics – but it substantively changes the questions, getting to the correct ones, rather than the meaningless ones that come from outside science.

Yet, by denying the value of other ways of looking at the world, believers in scientism are setting up an alternative philosophical system – one that its adherents believe only scientists should be permitted to explore. The danger of scientism is that discussions of issues fundamental to our futures, such as human rights or control of technology for the common good, become issues solely for natural scientists, who usually lack any background in thinking outside their own narrow disciplines.

The tendency among many scientists and technologists towards a belief in scientism needs to be kept in check. If not, scientism can lead to scientific programmes that breach common-sense ethical standards. Perhaps the best-known example is eugenics – an idea popularised by mainstream British scientists in the early twentieth century, along with prominent public figures such as George Bernard Shaw, Marie Stopes and John Harvey Kellogg. Eugenic ideas underpinned both the Nazi Holocaust and the US Tuskegee Syphilis Study (1932–1972). In the latter, African-American men were told that they were receiving free medical treatment from the federal government of the United States, when in fact they were part of an experiment to see what would happen if syphilis was left untreated.

More recently, scientism has been the belief system adopted by many of the evangelists of ‘artificial intelligence’ (AI), some of whom call themselves transhumanists and ecomodernists. While these tech-evangelists can be found in many elite institutions, they are particularly concentrated in Silicon Valley.

While embracing science as a rational process of making sense of the world, technology assessment rejects the beliefs that underpin scientism. Instead it embraces an approach based on precaution, humility among all scientists and engineers, and acknowledgement of areas of ignorance. It recognises the need for ethics, openness about uncertainties and the embracing of multiple perspectives.

Indian scholar Shiv Visvanathan has been one of many calling for “cognitive justice” – the recognition that a plurality of knowledge exists. He is one of many who have endorsed the use of the term “pluriverse” to challenge scientism’s hubris that only one universal knowledge – that of the natural sciences – should override all other understandings of reality.

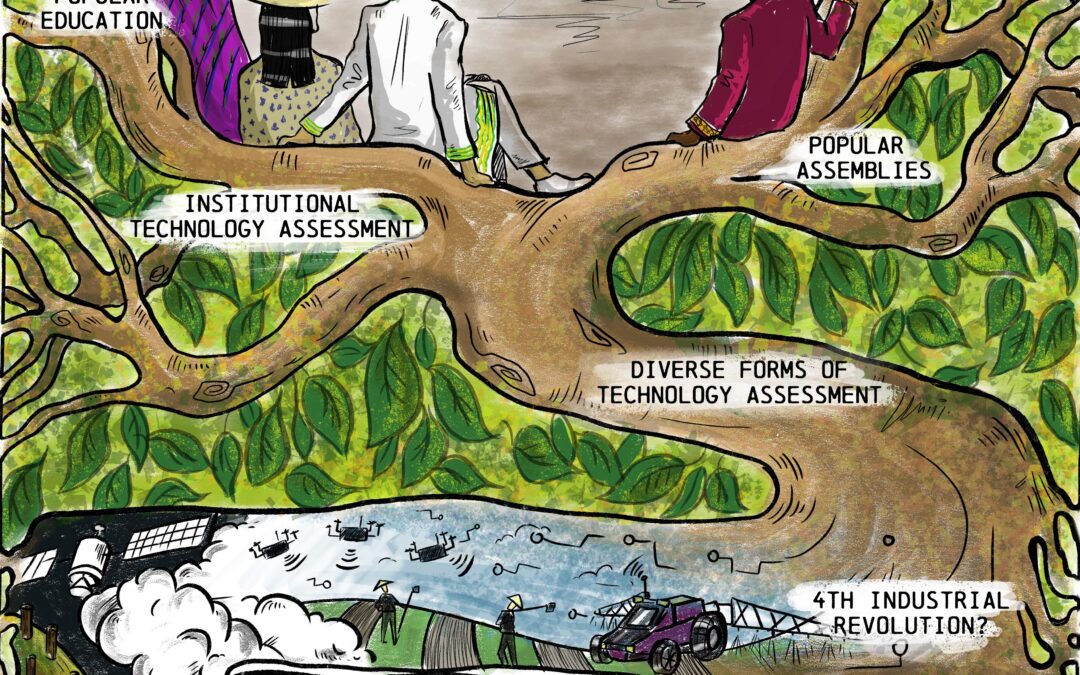

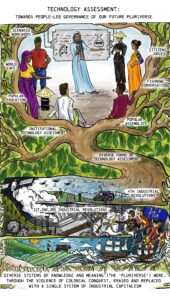

Taking their lead from industries such as agrichemicals, computer design and robot manufacturing, today’s technology decision makers are promoting what they call a “4th industrial revolution” that, if successful, could complete the process in which every realm of life becomes controlled by armies, algorithms and corporate-controlled machines. The starting point of all technology assessment initiatives is that this technocratic future is not inevitable and that people have a right to collectively determine their futures.

In the past, flawed policy processes for governing new technologies have often had chaotic and controversial results.

Examples of technologies that were initially permitted but eventually banned or restricted include a range of pesticides, genetically modified crops (see article on Molecular Manipulations) and some nuclear weapons technologies. These bans and controls were only put in place after considerable loss of human life, damage to health or irreversible ecosystem damage had already occurred.

Though it can be used to assess existing technologies, technology assessment is most effective when it is used to look forward – anticipating and preventing potential damage before new technologies are introduced (Box 3). Ideally, technology assessment would also influence the overall direction of scientific research and associated investments. In the field of agriculture and food, for example, technology assessment could foster political support for peasant-led agro-ecological approaches, rather than synthetic chemicals and genetic engineering.

Box 3: The Collingridge Dilemma

A key purpose of most technology assessment (TA) processes is to address what has become known as the Collingridge Dilemma (after pioneering technology theorist David Collingridge), which is based on two facts:

- Influencing or controlling technological developments is easier at an early stage when their implications are not yet manifest.

- By the time we know these implications, they are difficult to change.

The dilemma we face has dimensions of both information and power: An information problem because impacts of a new technology cannot be easily predicted until it is extensively developed and widely used, by which time it may be too late; a power problem because controlling or changing the trajectory of a technology is difficult when it has become entrenched and locked in.

Technology assessment addresses the problem of a lack of information about a new technology by subjecting the claims of its proponents to critical public scrutiny, usually involving some kind of public forum, such as a popular assembly, citizens’ jury or scenario workshop. The best approaches to technology assessment also bring these different kinds of knowledge into political decision making at an early stage of the technology’s development. This means that policies can then be formed to ensure that the technology can be developed and governed, if it is developed at all, in the interests of all our futures.

Institutional Technology Assessment

People have been assessing and sometimes opposing technologies throughout recorded history and beyond. The Roman historian Suetonius describes how, when a mechanical engineer offered to carry some heavy columns to the Capitol cheaply using a labour-saving device, the emperor Vespasian “gave no mean reward for his invention but refused to make use of it saying, You must let me feed my poor commons.”

In nineteenth-century England, the Luddites and Swing Rioters broke machinery in a context where only the richest men had the vote and most male workers would not have a vote for another hundred years. These protests occurred in parallel with similar movements that emerged in other countries, such as France and India, attempting to resist harmful technologies that were being violently imposed through the first Industrial Revolution.

Technology assessment as an institutional activity emerged in the 1960s, being first institutionalised as part of the United States Congress in 1974. This Office of Technology Assessment (OTA) provided congressional members and committees with objective and authoritative analysis of complex scientific and technical issues. It was abolished in 1995 by Republican members of Congress, then led by a staunch opponent of government regulation, Newt Gingrich. Despite this, the OTA model has been copied in European countries, such as Germany and Switzerland.

The fundamental drawback of most institutional approaches is that they systematically exclude the knowledge of people outside elite groups of professionally trained scientists, corporate stakeholders and government policymakers. Without effective scrutiny of these often-blinkered elites by people whose expertise comes from life experience, rather than professional training, the conclusions reached can reflect the group-think that often exists within these elite groups.

If undertaken competently (Boxes 4 and 5), approaches to technology assessment that adopt the principles of participatory action research can help ensure that new technologies are developed (or rejected) and old technologies rehabilitated (or jettisoned) based on the informed views of people in all their diversity. Greater inclusion of knowledge gained by lived experience, which often has the advantage of having been developed and used over many generations, is bound to produce better long-term policies than blindly trusting professionals who may have a vested interest in supporting the latest wave of new technologies.

Box 4: Prajateerpu, India

In response to an attempt, funded by the World Bank and the British government, to displace farmers from the land in the Indian state of Andhra Pradesh via a strategy called Vision 2020, a coalition of Indian grassroots-led organisations and action researchers from India and the UK undertook Prajateerpu (‘people’s verdict’). The process was a hybrid of two methods: an approach to deliberative democracy called a citizens’ jury, and another method called the scenario workshop. The jury was made up mainly of women farmers, with people from Dalit and Indigenous groups in a majority.

At the time, Golden Rice, the marketing term used for rice that had been fortified with Vitamin A through genetic modification, was being promoted by the biotechnology industry as a pioneering way of addressing malnutrition. When an expert spoke in support of Golden Rice, the farmers pointed out that it was the very dominance of rice in the diet, to the exclusion of vegetables and pulses, that threatened to cause malnutrition, not the lack of Vitamin A alone.

The report of this participatory action research process had a major impact regionally, nationally and internationally. The UK government’s response was to claim that the process was biased. Under pressure from the UK government, the two institutes where the authors were based withdrew the report without consulting the Indian organisations. The report was later reinstated, but the reputations of both the organisations that had first published and then undermined the report were damaged: they lost credibility amongst social movements for their lack of independence from government.

The evaluation of technologies by society is more urgent than ever right now because:

- The speed at which the products of industrial science and technology are being applied is accelerating, particularly in the wake of the COVID-19 pandemic and with the threat of global climate change.

- A small number of transnational corporations are controlling key technologies and resources on a global scale, beyond the sovereignty of states and decision making in the public interest.

- More and more areas of nature and culture are being commodified through new technologies, while new speculative financial instruments are promoting their privatisation and the hoarding of resources by private actors.

- The impacts that corporate-controlled science and associated technological developments are having on already threatened livelihoods, women’s rights, fragile environments and global crises are increasingly evident.

- Governments’ and societies’ capacity to monitor and regulate emerging technologies is currently insufficient.

For the sake of all humanity, we need technology assessment so that we can make informed decisions to protect rights and livelihoods and enhance the well-being of people and our planet.

There is no simple formula that can be applied to the assessment of technologies. Just as it is too simplistic to think of a single technology solving a complex social or environmental problem, so processes of assessment have to address the complexity that underpins communities, ecosystems and issues of justice.

Box 5: Latin American Network for the Assessment of Technologies

The Latin American Network for the Assessment of Technologies, called Red TECLA, is a network of organisations and individuals who challenge the technological tools and processes that are being imposed upon peoples without consideration of their impacts on life, nature and the livelihoods of peoples, communities and collectives.

TECLA was established in 2016 and has members (individuals and organisations) from Argentina, Uruguay, Chile, Ecuador, Brazil, México, and international networks, movements and civil society organisations, including La Via Campesina Latin America, World March of Women, Friends of the Earth Latin America and the Caribbean (ATALC), Network for a Transgenic-free, GRAIN and ETC Group.

It has an International Coordinating Committee and an Advisory Group of critical scientists and scholars. TECLA’s main activities to date have been:

- Discussion about what science and technology are and the context in which they operate, in order to understand their underlying logic, promote the integration of diverse knowledge systems and foster debate beyond the dominant scientific domains.

- Review of the technological horizon, in order to know what is coming and what potential social, economic and environmental impacts new technologies might have, both separately and in convergence.

- Establishing case studies about specific problems that aid understanding of complex situations and provide information to those affected by the technologies.

- Interaction with policymakers at all levels, to present critiques and technological proposals from grassroots organisations, countries and regions.

- Production of popular materials: booklets with case studies, as well as analysis and reflections about technologies drawn from TECLA meetings, assemblies and exchanges with other networks. The information produced by TECLA comes from its members and is written in an accessible style to reach the wide range of its members, from peasant communities to committed scientists.

By using participatory forms of technology assessment, Indigenous peoples, local communities and humanity in general could reclaim the ecologically appropriate practices that have often been erased during the eras of colonialism and that are still under attack today.

Participatory technology assessment challenges the false notion – which emerged from the European Enlightenment – that the thinking underpinning “modern” technology should replace Indigenous and peasant understandings of humanity and the natural world with which we are all entwined.

The Future: Technology Assessment Platforms

ETC Group has supported the emergence of a number of technology assessment platforms (TAPs – see Box 6), each of which is underpinned by a set of three core values:

1) People’s control for the common good: since technologies alter power in society, technology assessment should aim to be an open process whereby people are able to develop and exert control over technologies. These actions will be directed towards the common good, rather than the pursuit of private interests and profit.

2) Precaution: technology assessment should always begin with the precautionary principle, acknowledging that some evidence of concern about a risk, or about any harms caused by a new technology, is enough to warrant the oversight and governance of that technology.

3) Autonomy: technology assessment can help protect the rights, autonomy and sovereignty of marginal or oppressed peoples when used as a tool to ensure their free, prior and informed consent, with the ultimate right of peoples to say “no” to new and existing technologies.

TAPs also operate on the basis of three sets of principles: the precautionary principle (care), the right to know (transparency) and the right to say ‘no’ (consent), as well as those of participatory action research.

- i) Care: the principle of precaution

Precaution is simply about being careful and taking care. Today powerful interest groups promoting new industrial technologies misleadingly try to suggest that an attitude of care and precaution is somehow unscientific or anti-technology – that it will arrest the sort of entrepreneurial risk-taking needed for modern economies by enacting bans. But the precautionary principle, the logic of which lies behind the process of technology assessment, does not necessarily mean a ban on new technologies or stopping things being researched and understood. Enshrined in the legal systems of numerous countries, it simply urges that time and space be found to get things right using an appropriate range of perspectives. Where there is doubt, applying the precautionary principle tends towards policies that prevent the release of a potentially harmful technology.

To see the value of this, we can start by considering history. Take, for example, asbestos, lead, benzene, pesticides, ozone-depleting chemicals or industrial-scale fishing. In all these areas of technology (and many more), early precautionary action was dismissed as irrational by governments, business and scientific establishments alike, who were fearful of losing advantage in an industrial race. They claimed there were no alternatives and that advocates of precaution were anti-science or against everyone’s need to benefit from Western development. Yet now, it is agreed on all sides of the debate that levels of risk were initially drastically understated and that there were more alternative pathways than the proponents of these technologies acknowledged at the time.

Applying the precautionary principle is also a means whereby those involved with science and technology can be reminded that innovation and progress are not a one-way race to the future. Instead, technological developments can take many paths. Though often concealed behind the banner of ‘science’, the development of each technology involves intrinsically political choices. Assessing the risk arising from a new technology requires an understanding of the nature of uncertainty. The precautionary principle states that where there is some evidence of potential threats to human health or the environment, scientific uncertainty about how strong that evidence is should not be a reason for those in power to avoid their responsibility to take action to avoid harm. Uncertainty does not compel a particular action; it merely reminds us that lack of evidence of harm is not the same thing as evidence of lack of harm. In other words, the crux of precaution lies in us taking as much care in avoiding the scientific error of mistakenly assuming safety as we might in avoiding mistakenly assuming harm.

When it comes to uncertainty, it is not only difficult in practice to calculate some single definitive “sound scientific” or “evidence-based” solution, but these terms are often used in political ways to prevent questions by people with a wider range of perspectives than those with the narrow mindset of scientism. Good governance of science and technology means listening to questions from a range of expert perspectives, including those derived from lived experience, rather than solely relying on those from professional experts.

- ii) Transparency: the right to know

In our increasingly technologically complex world, there is a danger that almost all decisions of importance will be made behind closed doors. Experts connected to powerful organisations will make momentous choices using the justification that such judgements require specialised knowledge. The rest of us are excluded from influencing such decisions. The idea that the powerful technologies or science-based decisions that shape our lives are too complicated to involve the views of people outside a small group of experts can lead to power being handed to an ever-decreasing number of bureaucrats.

Regaining control over decisions that involve technologies requires helping a wider range of people to better understand the science and technological issues that are around them or are set to impact their own rights and living conditions. This includes making visible the presence of particular technologies (such as through labelling and awareness raising), creating easy to understand public information about their uses and abuses, sharing what is known about risks and uncertainties and acknowledging limits to knowledge about a technological development or scientific matter. This counters the myth of scientism – that scientific experts alone know best. In this way, everybody can bring their own knowledge and their own values and wisdom to bear upon how a technology is assessed and ultimately governed for the common good.

- iii) Consent: the right to say “no”

The right to say “no”, or to set the conditions for saying “yes”, to a new technology has been enshrined in several key United Nations conventions as a process of free, prior and informed consent, often shortened to FPIC. These four initials describe the collective right people should have to understand all relevant dimensions of scientific research and technological development without being pressured by those in authority, and to say no to either the research or the technological development if they believe it could have negative impacts.

The emergence of TAPs, built from the bottom up, contrasts with institutional initiatives, such as expert-led processes of technology assessment, that are often organised by elites from the top down. In this way TAPs will enable local communities, particularly those groups in society whose knowledge has been marginalised, to take greater control over the process of technology development.